iOS 15.2 adds new child Communication Safety in Messages feature

Apple has released iOS 15.2. The update brings the App Privacy Report in Settings, which shows how often apps have accessed location, photos, camera, microphone, and contacts; a new Macro photo control for the iPhone 13 Pro; the new Apple Music Voice Plan, a subscription tier that provides access to music using Siri; and more.

Today’s update also introduces Apple’s new child Communication Safety in Messages feature, which includes tools to warn children and their parents when a child receives or sends sexually explicit photos.

Earlier in the year, Apple said it was planning several new child safety tools, including automatic blurring of explicit images sent in Messages, detection of Child Sexual Abuse Material (CSAM) in a user’s photo library, and more.

Those plans prompted a backlash over privacy, centered on the tools’ ability to detect known child pornography and related sexual abuse material stored in iCloud Photos, which would be flagged and reported to the National Center for Missing and Exploited Children (NCMEC) in the US. Apple then announced that it would delay the launch of the new safety features after feedback from customers, advocacy groups, researchers and others.

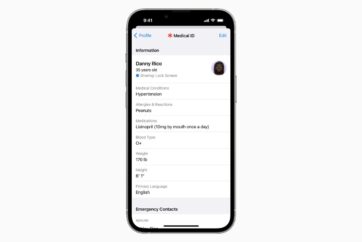

Starting today, iOS will show warnings to children and parents when a sexually explicit image is sent or received on a device connected to iCloud Family Sharing, and will automatically blur explicit images sent in Messages.

When receiving this type of content, Apple says the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.

Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit. The feature is designed so that Apple does not get access to the messages.

Apple has yet to announce when it will release its Child Sexual Abuse Material (CSAM) toolset, which will allow the company to detect known CSAM images stored in iCloud Photos.