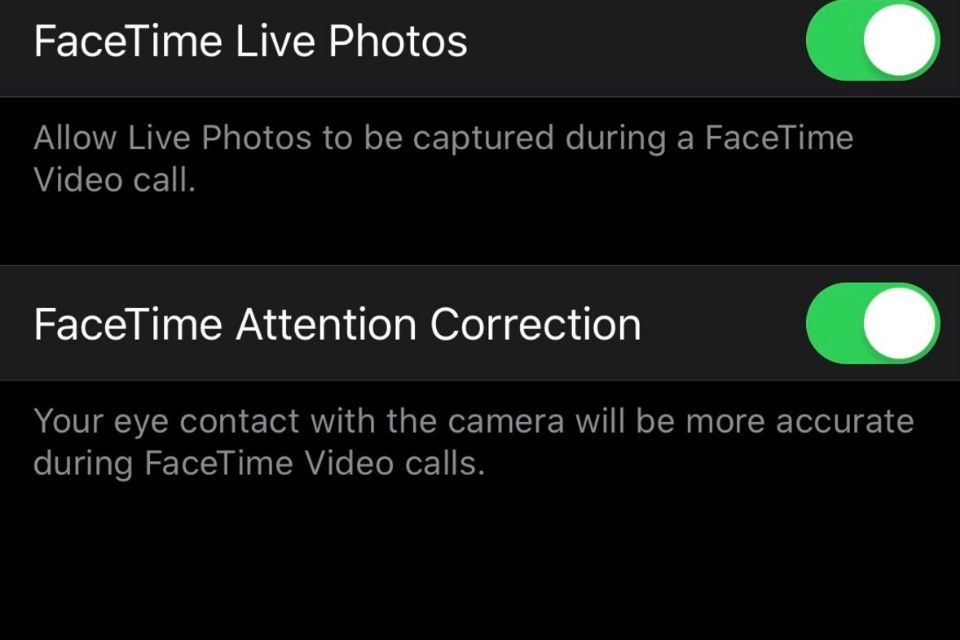

iOS 13 includes new FaceTime Attention Correction feature that uses ARKit to refocus a user’s eyes to look directly at the camera

Apple has added a new “FaceTime Attention Correction” feature in the latest developer beta of iOS 13. The feature uses ARKit to adjust the appearance of a user’s eyes during a FaceTime call so that they seem to be looking directly into the device’s front-facing camera, even when they are actually looking down at the live video feed of the person on the other end of the call.

When you make a FaceTime call, you usually look at your device’s screen to watch the other person’s live video rather than into the camera, so the two of you never quite make eye contact and the call feels less personal. The new “FaceTime Attention Correction” feature aims to fix that by using ARKit to create a depth map of the face and then automatically repositioning the user’s eyes in the video, so that the person on the other end sees what appears to be direct eye contact, even when in reality there is none.
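To get a rough sense of how far a caller’s gaze drifts from the camera, the short Python sketch below estimates the angle between looking at the camera and looking at a point on the screen. It is only an illustration of the geometry and says nothing about how Apple implements the feature. The function name `gaze_offset_degrees` and the numbers (a face 7 cm below the camera on screen, viewed from 35 cm away) are assumptions chosen for the example, and the sketch assumes the viewer’s eyes sit directly in front of the camera.

```python
import math


def gaze_offset_degrees(camera_to_target_cm: float, viewing_distance_cm: float) -> float:
    """Angle between the line of sight to the camera and the line of sight
    to a point on the screen.

    Simplifying assumption: the eyes sit directly in front of the camera, so
    the on-screen offset and the viewing distance form a right triangle.
    """
    if viewing_distance_cm <= 0:
        raise ValueError("viewing distance must be positive")
    return math.degrees(math.atan2(camera_to_target_cm, viewing_distance_cm))


# Hypothetical numbers, not measurements: the other person's face appears
# 7 cm below the camera and the phone is held 35 cm from the eyes.
offset = gaze_offset_degrees(7.0, 35.0)
print(f"Gaze is about {offset:.1f} degrees away from the camera")
```

With these assumed numbers the gaze lands roughly 11 degrees below the camera, which gives a sense of the size of the gap a correction like this has to close.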

As shown by beta tester Will Sigmon, the new feature makes a huge difference when making a FaceTime call.

The new feature appears to be available only on the iPhone XS and iPhone XS Max, and it can be enabled through a new toggle in the FaceTime section of the Settings app.